Ad Fraud 101: How to Identify and Protect Your Budget from Invalid Traffic

Introduction: The $172 Billion Leak in Digital Advertising

Imagine running a marathon, only to discover at the finish line that nearly one in every five miles you ran was on a treadmill—going nowhere, burning resources, and producing no progress. This is the daily reality for digital advertisers in 2026.

Ad fraud has evolved from a niche concern into a systemic industry challenge. Annual losses are projected to reach $172 billion by 2028, up from $88 billion in 2023. Recent analysis of over 2.7 billion paid ad clicks across major platforms found that 8.51% of all paid traffic is invalid, quietly eroding return on ad spend for businesses of all sizes. For advertisers targeting 3–4x ROAS, even these seemingly modest fraud rates translate into millions in lost revenue opportunity.

This guide provides a comprehensive introduction to ad fraud—what it is, how it works, and most importantly, how to identify and protect your advertising budget from invalid traffic. Whether you’re running campaigns on Google, Meta, TikTok, or any other platform, understanding these fundamentals is no longer optional; it’s essential for survival in modern digital advertising.

Part 1: What Is Ad Fraud? Defining the Problem

1.1 The Core Definition

Ad fraud is any deliberate activity that prevents the legitimate delivery of digital advertisements to real human users. It encompasses a wide range of tactics designed to steal advertising budgets, inflate metrics, and distort campaign performance data.

At its simplest, ad fraud means paying for advertising results that never happened. A click that came from a bot, an impression that was never actually viewable, a form submission generated by malware—all represent fraud that consumes budget without delivering business value.

1.2 The Scale of the Problem

The numbers are staggering and worth internalizing:

| Metric | Value |
|---|---|
| Global ad fraud losses (2026 projection) | $80–100+ billion annually |
| Projected losses by 2028 | $172 billion |
| Average IVT rate across campaigns | ~11% (roughly 1 in 9 clicks invalid) |
| Total impressions from bots | 14–15% of all delivered impressions |
| TikTok’s invalid traffic rate | 24.2% |
| LinkedIn’s invalid traffic rate | 19.88% |
| Google Ads search IVT rate | 7.57% |
| Lead-gen industry fraud exposure | 32% higher than e-commerce |

These figures represent more than abstract statistics. For the average advertiser, more than one in every seven ads may not be viewed by humans. Up to 20% of total internet traffic is estimated to be generated by botnets capable of committing ad fraud.

1.3 GIVT vs. SIVT: Understanding the Fraud Spectrum

Not all invalid traffic is created equal. Industry standards distinguish between two categories:

General Invalid Traffic (GIVT) includes known bots, crawlers, and other non-human traffic that can be identified through standard detection methods. Examples are search engine crawlers, known bot IP addresses, and simple automated scripts. GIVT is relatively easy to filter using basic detection tools.

Sophisticated Invalid Traffic (SIVT) involves advanced fraud techniques that basic filters miss and that require dedicated detection capabilities. This includes malware-driven traffic, hijacked devices, coordinated human fraud farms, and, increasingly, AI-powered fraud that mimics human behavior patterns to evade detection.

The distinction matters because GIVT can often be caught by platform-level filters, while SIVT requires dedicated, layered protection strategies.

Part 2: The Major Types of Ad Fraud

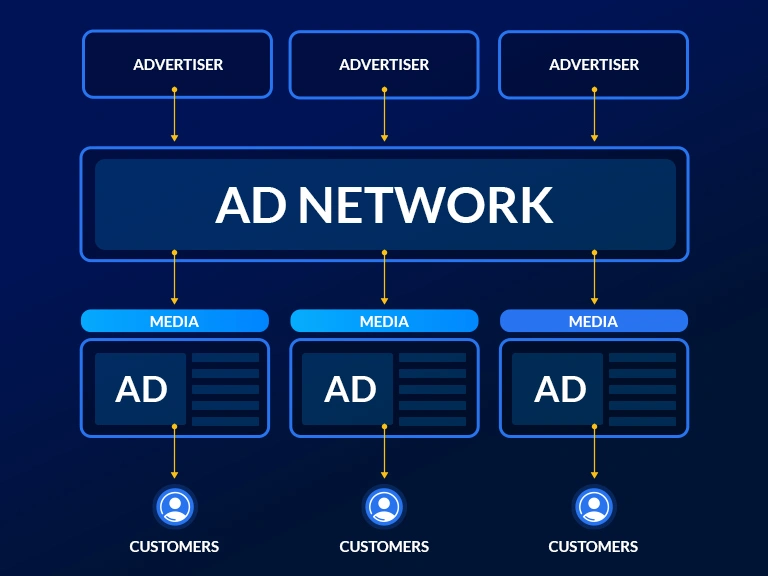

Ad fraud manifests in numerous forms, each targeting different parts of the advertising ecosystem.

2.1 Bot Traffic and Click Fraud

Automated scripts and botnets generate fake clicks and impressions at scale. Simple bots are easily recognizable, but modern variants are increasingly sophisticated: they can execute JavaScript, rotate IP addresses, and mimic human browsing patterns.

Click farms employ large groups of low-paid workers to manually click ads, install apps, or perform basic interactions. Because real people perform the actions, this type of fraud is hard for software systems or rule-based detection to identify.

The Android malware threat: A newly documented Android malware family infects devices through seemingly legitimate apps, then runs in the background to load ad content in hidden views and trigger automated clicks. The malware can communicate with remote command servers to receive updated fraud instructions, open web pages in hidden views, and generate fake impressions and engagement metrics that appear normal in analytics dashboards. For advertisers, the most worrying aspect is that the malware’s primary objective is to monetize bogus traffic at scale, directly funded by advertiser CPC and CPM budgets.

2.2 Impression Fraud

Not all fraud involves clicks. Impression fraud tricks advertisers into paying for ads that were never actually seen.

Pixel stuffing involves serving ads in 1×1 pixel frames, invisible to users but countable as impressions.

Ad stacking layers multiple ads in a single placement, so only the top ad is visible while all count as impressions.

Placement fraud delivers ads in low-quality or invisible positions where users never actually view them.

2.3 Domain Spoofing

Fraudsters forge bid requests to make low-quality inventory appear as premium publisher placements. Advertisers end up paying premium CPMs for what they believe is inventory on sites like The New York Times or The Wall Street Journal when, in reality, the ads are running on obscure, low-quality domains.

Ads.txt (Authorized Digital Sellers) helps combat this by allowing publishers to declare which sellers are authorized to sell their inventory, but it is not sufficient on its own.
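As a rough illustration of how an ads.txt check works, the sketch below parses ads.txt content and tests whether a given seller appears in it. Each data record in an ads.txt file is a comma-separated line: ad system domain, seller account ID, relationship (DIRECT or RESELLER), and an optional certification authority ID. The domains, account IDs, and function names here are hypothetical, and a production check would also fetch the file over HTTPS and handle redirects and malformed input.

```python
def parse_ads_txt(text):
    """Parse ads.txt content into a set of (ad_system, seller_id, relationship)."""
    records = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        fields = [f.strip() for f in line.split(",")]
        if "=" in fields[0]:  # variable lines such as contact=... are not records
            continue
        if len(fields) >= 3:
            records.add((fields[0].lower(), fields[1], fields[2].upper()))
    return records


def is_authorized(records, ad_system, seller_id):
    """True if the publisher lists this seller account for this ad system."""
    return any(r[0] == ad_system.lower() and r[1] == seller_id for r in records)


# Hypothetical ads.txt content for an illustrative publisher.
SAMPLE_ADS_TXT = """
# ads.txt for example-publisher.test
contact=adops@example-publisher.test
exchange-a.test, pub-1234, DIRECT, abc123
exchange-b.test, 5678, RESELLER
"""

records = parse_ads_txt(SAMPLE_ADS_TXT)
print(is_authorized(records, "exchange-a.test", "pub-1234"))
print(is_authorized(records, "exchange-a.test", "pub-9999"))
```

A seller that is absent from the file is a warning sign, but presence alone does not prove the inventory is genuine, which is why the check is only one layer.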

2.4 Device and Geo Spoofing

Attackers mask true device characteristics and geographic locations to bypass targeting restrictions and appear as high-value audiences. A campaign targeting New York professionals might instead serve to a server farm in Eastern Europe, with the traffic manipulated to appear as if it originated from Manhattan iPhones.

2.5 Mobile Proxy Abuse

This rapidly growing fraud vector routes fraudulent traffic through residential and mobile IP addresses to evade data center detection. Because the traffic appears to come from real mobile devices, often infected with malware, it is much harder to distinguish from legitimate users.

2.6 CTV and OTT Fraud

Connected TV’s high CPMs make it an attractive target for fraudsters. Common techniques include SSAI (server-side ad insertion) manipulation, device ID spoofing, and fake streaming inventory.

2.7 Install Fraud and Attribution Poisoning

Install fraud generates fake app installs and in-app events without a real user ever installing the application. It targets mobile app install campaigns and includes click injection and attribution fraud, where fraudsters claim credit for organic installs by injecting clicks just before installation occurs.

2.8 Malvertising: When the Ad Itself Is the Threat

Beyond wasting budget, some fraudulent ads actively harm users. Malvertising (malicious advertising) has surged to become the single largest threat to individuals, accounting for 41% of all cyberattacks according to Gen telemetry.

A landmark study by Gen Threat Labs analyzed 14.5 million ads running on Meta platforms across the EU and UK over 23 days, representing more than 10.7 billion impressions. The findings were alarming: nearly one in three ads (30.99%) pointed to a scam, phishing, or malware link. These scam ads generated more than 300 million impressions in less than a month.

The activity was highly concentrated, with just 10 advertisers responsible for over 56% of all observed scam ads. Repeated campaign clusters were traced to shared payment and infrastructure linked to China and Hong Kong, indicating organized, industrial-scale operations rather than isolated bad actors.

Modern malvertising doesn’t look suspicious; it looks professional, familiar, and precisely targeted. Attackers use “scam-yourself” techniques like FakeCaptcha and ClickFix, where victims are nudged into doing the attacker’s work for them: approving browser prompts, enabling push notifications, or copying and pasting commands.

Part 3: How to Identify Ad Fraud in Your Campaigns

Detection is the first line of defense. Here are the key signals that may indicate invalid traffic in your campaigns.

3.1 Behavioral and Engagement Signals

Bots behave differently from humans in quantifiable ways:

| Signal | What to Look For |
|---|---|
| Click velocity | Unusually high click rates from specific sources or time periods |
| Engagement duration | Very short session times, high bounce rates, zero engagement |
| Conversion timing | Surge of conversions clustered in unnatural intervals post-click |
| Repetition patterns | Repeated clicks from same devices or IP ranges without conversions |
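The click velocity signal can be approximated with a sliding-window counter per source. The sketch below is a minimal illustration, assuming clicks arrive as `(unix_timestamp, source)` pairs; the function name, the 60-second window, and the threshold of 5 clicks are illustrative assumptions that would need tuning against your own baseline.

```python
from collections import defaultdict, deque


def flag_high_velocity(clicks, window_seconds=60, max_clicks=5):
    """Return sources with more than max_clicks inside any sliding window.

    clicks: iterable of (unix_timestamp, source) tuples, in any order.
    """
    recent = defaultdict(deque)  # source -> timestamps still inside the window
    flagged = set()
    for ts, source in sorted(clicks):
        window = recent[source]
        window.append(ts)
        # Evict timestamps that have fallen out of the window.
        while ts - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_clicks:
            flagged.add(source)
    return flagged


# Illustrative data: one source clicks every second, another every two minutes.
clicks = [(i, "203.0.113.7") for i in range(10)]
clicks += [(i * 120, "198.51.100.2") for i in range(10)]
print(flag_high_velocity(clicks))
```

The source key could equally be a device fingerprint or an IP range rather than a single address.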

3.2 Technical and Infrastructure Signals

IP and network anomalies: Traffic concentrated from hosting providers, data centers, or unusually “clean” consumer ISPs at odd hours. Traffic originating from data centers or cloud hosting providers often indicates bot activity.

Device fingerprinting mismatches: Unusual combinations of operating systems and browsers, massive numbers of matching device fingerprints, or strange user-agent strings. Clusters of clicks from similar device models or OS versions that don’t match your typical audience profile warrant investigation.

Geo mismatch: Paid clicks concentrated in locations that do not match customer distribution or serviceability.
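One simple way to surface a geo mismatch is to compare each region's share of paid clicks with its share of real customers. The sketch below assumes you can export click counts per region and know your approximate customer distribution; the region codes, the 10-point tolerance, and the function name are illustrative assumptions.

```python
def geo_mismatch(click_counts, customer_share, tolerance=0.10):
    """Return regions whose click share exceeds their customer share.

    click_counts: dict of region -> number of paid clicks.
    customer_share: dict of region -> fraction of real customers (0 to 1).
    Regions missing from customer_share are treated as having no customers.
    """
    total = sum(click_counts.values())
    if total == 0:
        return {}
    excess = {}
    for region, count in click_counts.items():
        gap = count / total - customer_share.get(region, 0.0)
        if gap > tolerance:
            excess[region] = round(gap, 3)
    return excess


# Illustrative numbers: 30% of clicks come from a region with no customers.
clicks_by_region = {"US": 600, "GB": 100, "XX": 300}
customers = {"US": 0.8, "GB": 0.2}
print(geo_mismatch(clicks_by_region, customers))
```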

3.3 Conversion Path Anomalies

Form abandonment patterns: Form starts without completes, repeated short sessions, or identical dwell times.

Lead validation fallout: Higher percentage of unreachable phone numbers, duplicate submissions, or “nonsense” form fields.
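A few of these conversion-path checks can be automated on the lead records themselves. The sketch below flags duplicate emails, implausibly short phone numbers, and dwell times shared by several submissions. The field names (`email`, `phone`, `dwell_seconds`) and the thresholds are assumptions for illustration, and none of these checks confirms that a phone number is actually reachable.

```python
from collections import Counter


def suspicious_leads(leads, max_same_dwell=3, min_phone_digits=7):
    """Return (index, reasons) for leads that trip at least one check."""
    emails = Counter(lead["email"].strip().lower() for lead in leads)
    dwells = Counter(lead["dwell_seconds"] for lead in leads)
    flagged = []
    for i, lead in enumerate(leads):
        reasons = []
        if emails[lead["email"].strip().lower()] > 1:
            reasons.append("duplicate_email")
        if sum(ch.isdigit() for ch in lead["phone"]) < min_phone_digits:
            reasons.append("short_phone")
        if dwells[lead["dwell_seconds"]] >= max_same_dwell:
            reasons.append("identical_dwell")
        if reasons:
            flagged.append((i, reasons))
    return flagged


# Illustrative leads; all addresses and numbers are made up.
leads = [
    {"email": "a@example.test", "phone": "+1 212 555 0100", "dwell_seconds": 41.2},
    {"email": "A@example.test ", "phone": "123", "dwell_seconds": 7.0},
    {"email": "b@example.test", "phone": "+44 20 7946 0000", "dwell_seconds": 7.0},
    {"email": "c@example.test", "phone": "+1 646 555 0101", "dwell_seconds": 7.0},
    {"email": "d@example.test", "phone": "+1 917 555 0102", "dwell_seconds": 63.5},
]
for index, reasons in suspicious_leads(leads):
    print(index, reasons)
```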

3.4 Account-Level Indicators

Search campaigns: Sudden growth in long-tail query volume with weak post-click engagement and inconsistent lead quality.

Display and video: Placement-level spikes with low incremental conversion value and high bounce or “single page” sessions.

PMax campaigns: Performance volatility where spend ramps but conversion quality drops, especially when you lack strong offline conversion feedback.

Affiliate/referral-heavy funnels: Inflated assisted conversion paths and suspiciously consistent timing patterns.

Part 4: Platform-Specific Risk Levels

Not all advertising platforms face equal fraud risk. The 2026 Global Invalid Traffic Report from Lunio analyzed over 2.7 billion paid ad clicks and revealed stark differences:

| Platform | Invalid Traffic Rate |
|---|---|
| TikTok | 24.2% |
| LinkedIn | 19.88% |
| X/Twitter | 12.79% |
| Bing Ads | 10.32% |
| Meta (Facebook/Instagram) | 8.2% |
| Google Ads Search | 7.57% |

Lead-generation industries, including banking, financial services, and insurance (BFSI), telecom, and education, face 32% higher invalid traffic than e-commerce, while gaming and iGaming are most affected overall.

These numbers don’t mean you should abandon high-risk platforms. They mean you must apply stricter monitoring, verification, and protection to campaigns running there.
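To translate the platform rates into budget terms, multiply each platform's spend by its invalid traffic rate. The sketch below uses the rates quoted in this section; the budget figures are made-up inputs, and it assumes for simplicity that invalid clicks cost the same as valid ones, so the result is a rough estimate rather than a measurement.

```python
# Invalid traffic rates as quoted in this section.
IVT_RATES = {
    "TikTok": 0.242,
    "LinkedIn": 0.1988,
    "X/Twitter": 0.1279,
    "Bing Ads": 0.1032,
    "Meta": 0.082,
    "Google Ads Search": 0.0757,
}


def estimated_invalid_spend(budgets):
    """Return estimated spend consumed by invalid traffic, per platform."""
    return {name: round(spend * IVT_RATES[name], 2) for name, spend in budgets.items()}


# Hypothetical monthly budgets in dollars.
budgets = {"TikTok": 10_000, "Google Ads Search": 50_000}
waste = estimated_invalid_spend(budgets)
print(waste)
print(round(sum(waste.values()), 2))
```

On these inputs the smaller TikTok budget loses a larger share of itself than the Search budget does, which is the argument for stricter monitoring rather than equal treatment.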

Part 5: Building a Fraud Protection Strategy

Effective fraud protection is not a single tool or tactic; it’s a multi-layered, continuously evolving approach.

5.1 Establish a Clean Baseline

Define what “normal” looks like for each campaign variable:

Click-through rates

Conversion rates

Session duration

Geographic distribution

Device mix

IP and ASN patterns

Collect full telemetry for every click: timestamp, IP, user agent, referrer, landing page, and conversion path. Without this baseline, you cannot identify anomalies when they occur.
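Once a baseline exists, a new observation can be scored by how far it sits from the historical mean. The sketch below uses a plain z-score on daily click-through rate as one possible approach; the sample values are invented, and real campaign metrics are often seasonal or skewed, so a threshold such as 3 standard deviations is an assumption to validate rather than a rule.

```python
from statistics import mean, stdev


def zscore(history, value):
    """Number of standard deviations between value and the historical mean."""
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs 2+ points
    if sigma == 0:
        return 0.0
    return (value - mu) / sigma


# Hypothetical daily CTR values for one campaign over a week.
daily_ctr = [0.031, 0.029, 0.030, 0.032, 0.028, 0.030, 0.031]

print(abs(zscore(daily_ctr, 0.030)) > 3)  # a typical day
print(abs(zscore(daily_ctr, 0.060)) > 3)  # CTR suddenly doubles
```

The same function applies to conversion rate, session duration, or the share of clicks from a given device type.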

5.2 Implement Real-Time Detection

Feed telemetry into an analytics engine that automatically flags anomalies in real time. Look for:

Spikes in clicks from a single ASN

Repeating click patterns

Impossible conversion timings

Real-time detection is the foundation of bot blocking, preventing invalid traffic from affecting ad spend or performance data.
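Two of the checks listed above can be sketched in a few lines: an ASN whose share of clicks jumps well above its baseline, and conversions that arrive faster after the click than a person could plausibly act. The function names, the 3x factor, the 1% baseline floor, and the 2-second minimum are illustrative assumptions, and the AS numbers come from the range reserved for documentation.

```python
def asn_spikes(clicks_by_asn, baseline_share, factor=3.0, min_clicks=20):
    """Return ASNs whose current click share exceeds factor x their baseline."""
    total = sum(clicks_by_asn.values())
    flagged = []
    for asn, count in clicks_by_asn.items():
        if count < min_clicks:
            continue  # too few clicks to judge
        share = count / total
        # Floor the baseline so previously unseen ASNs are not flagged trivially.
        expected = max(baseline_share.get(asn, 0.0), 0.01)
        if share > factor * expected:
            flagged.append(asn)
    return sorted(flagged)


def impossible_conversions(events, min_seconds=2.0):
    """Return click IDs whose conversion came implausibly soon after the click.

    events: iterable of (click_id, click_ts, conversion_ts) tuples.
    """
    return [cid for cid, click_ts, conv_ts in events if conv_ts - click_ts < min_seconds]


current = {"AS64500": 300, "AS64501": 50, "AS64502": 650}
baseline = {"AS64500": 0.05, "AS64501": 0.05, "AS64502": 0.90}
print(asn_spikes(current, baseline))
print(impossible_conversions([("c1", 100.0, 100.4), ("c2", 100.0, 185.0)]))
```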

5.3 Use IP and Device Fingerprinting

Track repeat offenders by monitoring:

IP addresses and ASNs

Device fingerprints

Referrer chains

Cookie behavior

Maintain dynamic blocklists of known malicious IP addresses, including proxy networks and data center clusters typically associated with fraud. The Fraudlogix IP Blocklist, for example, tracks over 30 million high-risk IPs with hourly updates.
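Matching a click's IP against a blocklist of network ranges can be done with Python's standard `ipaddress` module. The sketch below is a minimal in-memory version; the `Blocklist` class is hypothetical, the ranges are documentation addresses, and it does not represent the format or API of any commercial blocklist. A list with millions of entries would need an indexed structure rather than a linear scan.

```python
import ipaddress


class Blocklist:
    """A small in-memory blocklist of CIDR ranges (linear scan)."""

    def __init__(self, cidrs):
        self.networks = [ipaddress.ip_network(c) for c in cidrs]

    def contains(self, ip):
        """True if ip falls inside any blocked range."""
        addr = ipaddress.ip_address(ip)
        return any(
            addr.version == net.version and addr in net for net in self.networks
        )


# Documentation ranges standing in for real high-risk networks.
blocklist = Blocklist(["203.0.113.0/24", "2001:db8::/32"])
print(blocklist.contains("203.0.113.45"))
print(blocklist.contains("198.51.100.1"))
```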

5.4 Move Beyond JavaScript-Only Detection

Critical 2026 update: AI-assisted SIVT is now actively bypassing JavaScript-based fraud detection. If your click defense depends on client-side JavaScript signals alone, you may be paying for invalid activity that never surfaces in your dashboards.

The fix is a layered approach that makes fraud expensive at multiple layers: acquisition, click, session, and lead validation.

5.5 Implement Server-Side Validation

Ensure you have server logs and event integrity checks that do not depend on the browser environment. JavaScript can be faked; server-side patterns are harder to spoof at scale.
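One form of event integrity check is to have the server sign each click when it is logged and verify that signature when a conversion later claims the click. The sketch below uses an HMAC for this. It is one possible design under stated assumptions, not a prescribed method: the secret, the token layout, and the 30-minute validity window are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical server-side secret; in practice load it from secure storage.
SECRET_KEY = b"replace-with-a-real-secret"


def sign_click(click_id, click_ts):
    """Return a hex signature binding a click ID to its server timestamp."""
    message = f"{click_id}:{click_ts}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def verify_click(click_id, click_ts, signature, now, max_age_seconds=1800):
    """True if the signature matches and the click is recent enough."""
    fresh = 0 <= now - click_ts <= max_age_seconds
    expected = sign_click(click_id, click_ts)
    return fresh and hmac.compare_digest(expected, signature)


token = sign_click("click-abc", 1_000)
print(verify_click("click-abc", 1_000, token, now=1_100))  # genuine and recent
print(verify_click("click-xyz", 1_000, token, now=1_100))  # forged click ID
```

A conversion that arrives without a valid signature never passed through the server's click endpoint, whatever the browser-side signals say.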

5.6 Create Operational Response Playbooks

Document response procedures for different severity levels:

| Severity | Response |
|---|---|
| Low confidence anomalies | Alert + observe |
| Confirmed attacks | Block + pause affected campaigns |
| Sustained competitor assaults | Escalate + report to platforms, consider legal consultation |

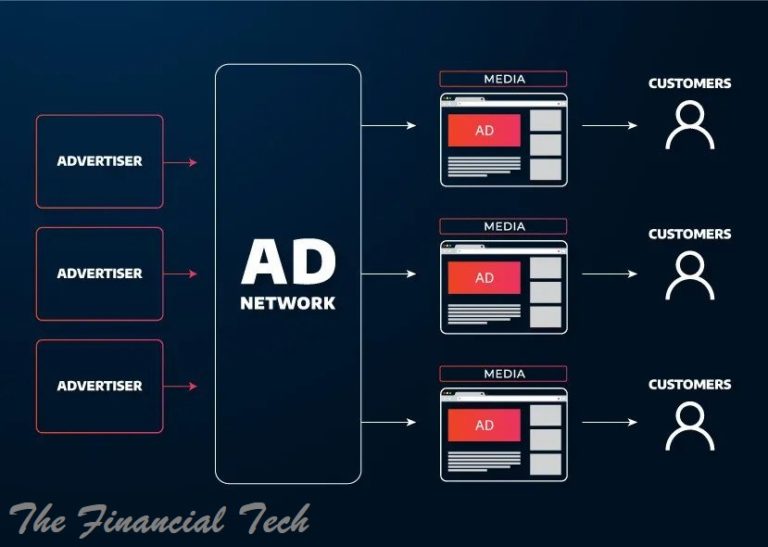

5.7 Collaborate with Ad Networks

Many advertisers underestimate their ability to influence traffic quality on advertising platforms. Effective steps include:

Blacklisting placements or audience groups with high IVT rates

Using more stringent geo-targeting and demographic restrictions

Curtailing access to audience networks or poor third-party placements

Reporting fake traffic to ad networks for investigation

5.8 Segment and Isolate Risk

Split high-risk inventory (Display, some partner traffic) into separate campaigns with capped budgets and stricter targeting. This prevents low-quality sources from cannibalizing high-intent Search budgets.

5.9 Implement Lead Quality Feedback Loops

Pipe “bad lead” signals back into bidding and exclusions. If you cannot mark low-quality leads reliably, you are training algorithms to buy more of them.

5.10 Continuous Monitoring and Revision

Bot networks evolve rapidly, and static rules become outdated quickly. A sustainable strategy requires:

Constantly updated threat intelligence

Adaptive machine-learning models

Periodic traffic quality checks

Automatic detection of sudden anomalies or spikes

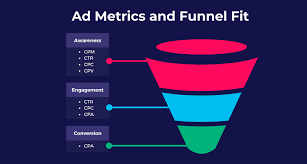

Part 6: Measurement and KPIs

Track these metrics to understand the value of your fraud protection efforts:

| KPI | What It Measures |
|---|---|
| Invalid click rate (IVR) | Percentage of clicks marked as invalid |
| Spend recovered | Refunded amounts after platform disputes |
| Conversion cost reduction | Impact of blocks on cost per conversion |
| IPs blacklisted | Number of confirmed malicious sources blocked |
| Conversion rate improvement | Cleaner traffic drives higher true conversion rates |
| CPA/ROAS improvement | Removing fraud improves cost efficiency |

These metrics validate that fraud mitigation efforts are delivering tangible ROI.
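Several of these KPIs reduce to simple ratios. The sketch below shows one way to compute invalid click rate, cost per acquisition, and the fractional CPA improvement between two periods; the function names and the input figures are illustrative assumptions, not values from any report.

```python
def invalid_click_rate(invalid_clicks, total_clicks):
    """Fraction of clicks marked invalid (0.0 when there were no clicks)."""
    return invalid_clicks / total_clicks if total_clicks else 0.0


def cpa(spend, conversions):
    """Cost per acquisition; infinite when nothing converted."""
    return spend / conversions if conversions else float("inf")


def cpa_improvement(cpa_before, cpa_after):
    """Fractional reduction in CPA; positive means conversions got cheaper."""
    return (cpa_before - cpa_after) / cpa_before


# Hypothetical month: 110 of 1,000 clicks invalid. After blocking, the same
# 100 conversions cost $4,000 instead of $5,000.
print(f"{invalid_click_rate(110, 1_000):.1%}")
before, after = cpa(5_000, 100), cpa(4_000, 100)
print(f"{cpa_improvement(before, after):.0%}")
```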

Part 7: Platform Reporting and Legal Options

When you identify indicators of a potential competitor-driven attack or sustained fraud operation, a structured response is essential.

7.1 Documentation

Collect and safeguard necessary logs and data:

Timestamps and click IDs

IP address ranges

User agents

Traffic flow patterns

Anomaly detection outputs

In environments where privacy regulations apply, ensure personally identifiable information is properly anonymized without compromising data integrity.
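Two common ways to reduce the identifiability of IP addresses in evidence logs are keyed hashing, which keeps the ability to group events from the same address, and truncation to a network prefix. The sketch below shows both. The key, the function names, and the /24 and /48 prefix lengths are assumptions, and keyed hashing is pseudonymization rather than full anonymization, so whether either technique satisfies a particular regulation is a question for your privacy counsel.

```python
import hashlib
import hmac
import ipaddress

# Hypothetical secret key; store it separately from the logs.
HASH_KEY = b"rotate-this-key"


def pseudonymize_ip(ip):
    """Stable keyed hash: the same IP always maps to the same token."""
    return hmac.new(HASH_KEY, ip.encode(), hashlib.sha256).hexdigest()[:16]


def truncate_ip(ip):
    """Keep only the network prefix (/24 for IPv4, /48 for IPv6)."""
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 48
    return str(ipaddress.ip_network(f"{ip}/{prefix}", strict=False))


print(truncate_ip("203.0.113.45"))
print(truncate_ip("2001:db8:abcd:12::1"))
print(pseudonymize_ip("203.0.113.45") == pseudonymize_ip("203.0.113.45"))
```

Truncation preserves range-level patterns such as clustering in one subnet, while the keyed hash preserves per-address repetition counts.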

7.2 Platform Reporting

Report suspected invalid activity directly to the platforms through their designated reporting mechanisms. Well-documented reports are more likely to receive serious consideration and remediation.

7.3 Legal Consultation

If you face repeated or clearly fraudulent invalid activity, consider consulting an attorney early to evaluate potential liability and legal steps. In some jurisdictions, persistent click fraud by a rival could give rise to contractual or even criminal liability.

Part 8: Emerging Threats for 2026 and Beyond

8.1 AI-Powered Fraud

Fraudsters now use machine learning to create more human-like bot behavior and evade detection. AI-assisted SIVT is specifically designed to bypass JavaScript-based detection by generating sessions that look “human” to client-side checks while still being non-human at the source.

8.2 Mobile Proxy Abuse

Routing fraudulent traffic through residential and mobile IP addresses is one of the fastest-growing fraud vectors. This makes detection significantly harder because traffic appears to originate from real consumer devices.

8.3 Device-Level Malware

The PEACHPIT botnet, disclosed in 2023, infected devices through 39 counterfeit apps downloaded more than 15 million times from major app stores. The botnet reached a peak of 121,000 Android devices and 159,000 iOS devices daily across 227 countries. Infected devices contained modules responsible for creating hidden WebViews used to request, render, and click on ads, masquerading the activity as originating from legitimate apps.

8.4 Agentic AI Traffic

Industry experts warn that agentic AI traffic will further blur the line between genuine and synthetic engagement. As AI agents begin shopping on behalf of consumers, distinguishing legitimate automated activity from fraud will become increasingly complex.

8.5 CTV and OTT Fraud Growth

As ad spend shifts to connected TV, fraud follows. CTV’s high CPMs make it an attractive target, and the fragmented nature of the ecosystem creates detection blind spots.

Part 9: The Cost of Complacency

The damage caused by bot traffic extends far beyond wasted clicks. Its effects ripple across the entire advertising funnel:

Wasted advertising budget: Every invalid click consumes budget that could have been spent reaching real prospects. Over time, these losses compound.

Distorted analytics and reporting: Bot traffic inflates impressions, sessions, and clicks while distorting engagement metrics. This makes it difficult to accurately evaluate campaign performance.

Misguided optimization decisions: Modern ad platforms rely heavily on machine learning and automated optimization. When bots contaminate performance data, algorithms are trained on false signals, leading to poor bidding, targeting, and placement decisions.

Reduced confidence in data: When advertisers cannot trust their analytics, strategic decision-making becomes reactive rather than data-driven, undermining growth initiatives.

Model pollution: The biggest cost is often indirect: automated bidding can shift budget toward the very traffic sources that are exploiting you.

In an environment where the average campaign may experience more than 10% invalid traffic, advertisers that actively protect their campaigns gain a meaningful competitive advantage.

Conclusion: From Reactive Defense to Operational Advantage

Ad fraud is not a problem that can be solved once and forgotten. It evolves continuously, using rotating IPs, proxy networks, AI-powered behavior, and device-level malware designed to bypass one-time checks.

The key principles of effective fraud protection:

Detection must be continuous, not periodic

Protection must be layered, combining IP blocking, device fingerprinting, behavioral analysis, and server-side validation

Response must be automated, with real-time blocking before fraud impacts budgets

Strategy must evolve, adapting to new fraud tactics and threat intelligence

The advertisers who succeed will be those who treat fraud protection as an operational advantage rather than a defensive afterthought. By embedding detection into daily operations, maintaining clean data signals, and using automated tools to block malicious sources before damage occurs, advertisers regain control over spend, data integrity, and long-term campaign scalability.

The question is no longer whether fraud affects your campaigns. It’s whether you’re doing enough to stop it.

Every day you delay implementing proper protection, a portion of your budget—potentially 10% or more—is funding criminal enterprises rather than acquiring customers. In a world where margins are tight and competition is fierce, that’s not just wasted money. It’s a competitive disadvantage you can no longer afford.
